The Structured Output Agent: An Architecture for Reliability

The Structured Output Agent: An Architecture for Reliable JSON, AST, and API Calls

Large Language Models are masters of unstructured text, but modern software systems run on structured data. The moment you need an LLM to generate a JSON object, populate an API call, or create a code structure like an Abstract Syntax Tree (AST), you encounter one of the biggest challenges in production AI: reliability.

The critical "why": simply adding "Please format your response as a valid JSON object" to your prompt is a recipe for disaster. This "prompt-and-pray" approach is fragile: it produces inconsistent keys and incorrect data types, and the model often wraps the JSON in conversational filler that breaks downstream parsers. A production system cannot tolerate this unpredictability. At ActiveWizards, we engineer systems that guarantee structured output not by hoping, but by architectural design. This article lays out a robust, multi-layered architecture for building an agent that reliably produces structured data.

The Architectural Pillars of Reliable Output

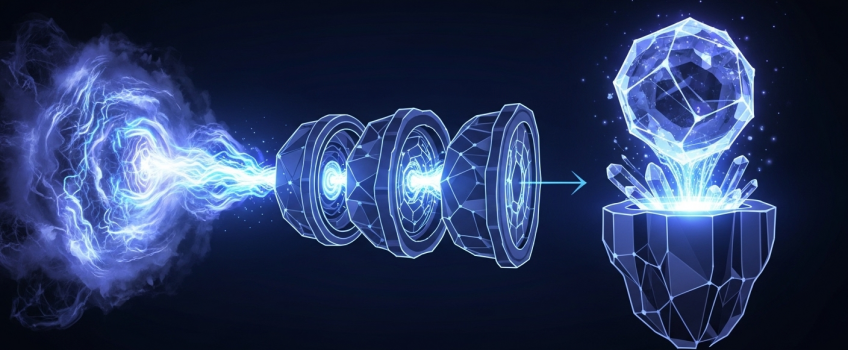

A reliable structured output system is not a single LLM call. It is a stateful process built on three pillars: a clear contract, a robust enforcement mechanism, and a self-correction loop for when things inevitably go wrong.

- Define the Contract: Use a typed schema to define exactly what the structured output should look like. This is your source of truth.

- Enforce the Contract: Leverage the LLM's native tool-use or function-calling capabilities to force it to adhere to the schema.

- Validate and Self-Correct: Implement a final validation step and a retry loop that feeds errors back to the LLM, allowing it to correct its own mistakes.

Pillar 1: Defining the Contract with Pydantic

Hope is not a strategy. We must explicitly define our desired output schema. The best tool for this in the Python ecosystem is Pydantic. By creating a Pydantic `BaseModel`, we create a "contract" that is both human-readable and machine-parseable. This model serves as the ground truth for our validation layer.

    from typing import List

    from pydantic import BaseModel, Field

    class UserProfile(BaseModel):
        """A structured representation of a user's profile."""

        user_id: int = Field(..., description="The unique numeric ID of the user.")
        username: str = Field(..., description="The user's public username.")
        interests: List[str] = Field(
            default_factory=list,
            description="A list of the user's declared interests.",
        )
        is_premium_member: bool = Field(..., description="Flag indicating premium status.")
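To make the idea of a "contract" concrete, here is a minimal sketch, assuming Pydantic v2 (which the `model_validate` call used later in this article implies). It derives a JSON Schema from the model and validates two payloads. The payload values are invented for illustration.

```python
from typing import List

from pydantic import BaseModel, Field, ValidationError


class UserProfile(BaseModel):
    """A structured representation of a user's profile."""

    user_id: int = Field(..., description="The unique numeric ID of the user.")
    username: str = Field(..., description="The user's public username.")
    interests: List[str] = Field(
        default_factory=list,
        description="A list of the user's declared interests.",
    )
    is_premium_member: bool = Field(..., description="Flag indicating premium status.")


# The same class doubles as a machine-readable JSON Schema.
schema = UserProfile.model_json_schema()
required_fields = schema["required"]

# A well-formed payload (illustrative values) validates into a typed object.
good = UserProfile.model_validate(
    {"user_id": 42, "username": "ada", "is_premium_member": True}
)

# A malformed payload is rejected with a per-field error report.
try:
    UserProfile.model_validate({"user_id": "not-a-number", "username": "ada"})
    error_fields = []
except ValidationError as exc:
    error_fields = sorted(str(err["loc"][0]) for err in exc.errors())

print(required_fields)  # fields the model must always supply
print(good.interests)   # optional field falls back to its default
print(error_fields)     # fields that failed validation
```

One detail worth knowing: in its default (lax) mode, Pydantic converts a numeric string such as `"42"` to the integer `42`, so only values that cannot be converted are rejected.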

Pillar 2: Enforcing the Contract with Function Calling

Modern LLMs from providers like OpenAI, Google, and Anthropic have built-in "function calling" or "tool use" capabilities. This is the primary enforcement mechanism. Instead of asking the LLM to just write JSON in its response, we describe a "tool" that it can call, and the parameters of that tool are defined by our Pydantic schema. This coerces the LLM's output into the desired structure far more reliably than a simple text prompt.
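As a rough sketch of what "describing a tool" looks like, the snippet below builds a tool definition from the model's JSON Schema. The outer envelope follows the `tools` format of OpenAI's Chat Completions API; other providers use a similar but not identical shape (Anthropic's Messages API, for instance, puts the schema under `input_schema`), so treat the outer keys as an assumption to check against your provider's documentation. The helper `build_tool_definition` and the tool name `save_user_profile` are hypothetical, and no API call is made.

```python
import json
from typing import Any, Dict, List, Type

from pydantic import BaseModel, Field


class UserProfile(BaseModel):
    """A structured representation of a user's profile."""

    user_id: int = Field(..., description="The unique numeric ID of the user.")
    username: str = Field(..., description="The user's public username.")
    interests: List[str] = Field(
        default_factory=list,
        description="A list of the user's declared interests.",
    )
    is_premium_member: bool = Field(..., description="Flag indicating premium status.")


def build_tool_definition(model: Type[BaseModel], name: str) -> Dict[str, Any]:
    """Wrap a Pydantic model's JSON Schema in an OpenAI-style tool envelope."""
    schema = model.model_json_schema()
    return {
        "type": "function",
        "function": {
            "name": name,  # hypothetical tool name chosen by the caller
            "description": schema.get("description", ""),
            "parameters": schema,  # the contract the model must fill in
        },
    }


tool = build_tool_definition(UserProfile, "save_user_profile")
print(json.dumps(tool, indent=2))
```

Because the tool parameters are generated from the same class that later validates the output, the contract lives in one place and the two cannot drift apart.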

Pillar 3: The Self-Correction Loop for Ultimate Reliability

Even with function calling, an LLM can occasionally produce an output that fails validation (e.g., passing a non-numeric string where an integer is required, or omitting a required field). A production system must handle this gracefully. The solution is a validation and retry loop.

Diagram 1: A self-correction loop for guaranteeing structured output.

This loop is implemented with a simple but powerful programming pattern. We wrap the LLM call and Pydantic validation in a `try...except` block within a loop. If Pydantic raises a `ValidationError`, we catch it, format the error message, and feed it back into the next LLM call as context, asking it to fix its mistake.

    # Conceptual implementation of a self-correction loop.
    # Assumes the UserProfile model from Pillar 1 is in scope.
    from typing import Callable

    from pydantic import ValidationError

    def get_structured_output_with_retry(
        prompt: str,
        call_llm: Callable[[str], dict],
        max_retries: int = 3,
    ) -> UserProfile:
        """Call the LLM until its output validates, up to max_retries attempts."""
        for i in range(max_retries):
            # 1. Call the LLM with the prompt and the Pydantic-derived tool schema.
            #    call_llm is a placeholder for your provider-specific call, e.g.
            #    llm.invoke(prompt, tools=[UserProfile]); it returns the parsed JSON.
            llm_output = call_llm(prompt)
            try:
                # 2. Attempt to validate the output against our Pydantic model
                validated_output = UserProfile.model_validate(llm_output)
                print("Validation successful!")
                return validated_output  # Success, exit the loop
            except ValidationError as e:
                print(f"Validation failed on attempt {i + 1}. Retrying...")
                # 3. If validation fails, update the prompt with the error context
                prompt += f"\n\nPrevious attempt failed. Please correct the output. Error: {e}"
        raise RuntimeError("Failed to generate valid structured output after multiple retries.")
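To watch the loop correct itself without calling a real model, the following self-contained sketch replaces the LLM with a scripted stand-in. `ScriptedLLM`, the `call_llm` parameter, and the canned payloads are all assumptions made for the demo; a real deployment would pass a function that calls your provider's SDK.

```python
from typing import Callable, List

from pydantic import BaseModel, Field, ValidationError


class UserProfile(BaseModel):
    user_id: int
    username: str
    interests: List[str] = Field(default_factory=list)
    is_premium_member: bool


def get_structured_output_with_retry(
    prompt: str, call_llm: Callable[[str], dict], max_retries: int = 3
) -> UserProfile:
    """Retry until the output validates, feeding each error back to the model."""
    for _ in range(max_retries):
        raw = call_llm(prompt)
        try:
            return UserProfile.model_validate(raw)
        except ValidationError as exc:
            prompt += f"\n\nPrevious attempt failed. Please correct the output. Error: {exc}"
    raise RuntimeError("Failed to generate valid structured output after multiple retries.")


class ScriptedLLM:
    """Hypothetical stand-in for a model: returns canned responses in order."""

    def __init__(self, responses: List[dict]) -> None:
        self._responses = list(responses)
        self.prompts: List[str] = []  # every prompt the "model" received

    def __call__(self, prompt: str) -> dict:
        self.prompts.append(prompt)
        return self._responses.pop(0)


fake_llm = ScriptedLLM(
    [
        # First attempt: user_id cannot be parsed as an integer.
        {"user_id": "forty-two", "username": "ada", "is_premium_member": True},
        # Second attempt: corrected output.
        {"user_id": 42, "username": "ada", "is_premium_member": True},
    ]
)

profile = get_structured_output_with_retry("Extract the profile for user ada.", fake_llm)
print(profile)
print(f"LLM calls made: {len(fake_llm.prompts)}")
```

The second prompt recorded in `fake_llm.prompts` contains the validation error from the first attempt, which is exactly the context a real model would use to repair its answer.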

This architecture is not just for simple JSON. It is the key to reliably generating complex, domain-specific structures. For example, a key task in advanced AI is generating code, often represented as an Abstract Syntax Tree (AST). You can define a series of Pydantic models that represent the valid nodes of your target AST (e.g., `IfStatement`, `FunctionCall`, `VariableAssignment`). By applying the same Define, Enforce, and Self-Correct architecture, you can build an agent that reliably generates structurally valid code trees, a task that is very hard to achieve with prompt engineering alone. Keep in mind that schema validation checks the shape of the tree, not whether the resulting program does what was intended.
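A deliberately small sketch of this idea follows, assuming Pydantic v2. The node names come from the paragraph above, but their fields (`node_type`, `condition`, `body`, and so on), the `Program` wrapper, and the sample tree are invented for illustration. To keep the example short, an `IfStatement` body may only contain simple statements rather than nested `if` blocks.

```python
from typing import List, Literal, Union

from pydantic import BaseModel, Field, ValidationError


class VariableAssignment(BaseModel):
    node_type: Literal["VariableAssignment"]
    name: str
    value: str


class FunctionCall(BaseModel):
    node_type: Literal["FunctionCall"]
    function: str
    args: List[str] = Field(default_factory=list)


class IfStatement(BaseModel):
    node_type: Literal["IfStatement"]
    condition: str
    # Simplification for this sketch: no nested IfStatement inside a body.
    body: List[Union[VariableAssignment, FunctionCall]]


class Program(BaseModel):
    statements: List[Union[VariableAssignment, FunctionCall, IfStatement]]


# A tree as an LLM might return it through a tool call (illustrative data).
candidate = {
    "statements": [
        {"node_type": "VariableAssignment", "name": "x", "value": "10"},
        {
            "node_type": "IfStatement",
            "condition": "x > 5",
            "body": [{"node_type": "FunctionCall", "function": "print", "args": ["x"]}],
        },
    ]
}
program = Program.model_validate(candidate)
print(type(program.statements[1]).__name__)

# An unknown node type is rejected, which would trigger the retry loop.
try:
    Program.model_validate({"statements": [{"node_type": "WhileLoop", "condition": "x"}]})
    rejected = False
except ValidationError:
    rejected = True
print(f"Unknown node rejected: {rejected}")
```

The `Literal` tag on each node is what lets validation pick the right class for every dictionary in the tree.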

Production-Ready Checklist

Before deploying a structured output agent, consider these points:

- Retry Limits & Cost: Have you implemented a maximum number of retries? An agent stuck in a correction loop can lead to runaway LLM costs.

- Observability: Are you logging and tracing the entire correction process? When an agent fails after 3 retries, you need to see the full history of attempts and validation errors to debug the root cause.

- Schema Versioning: How will you handle changes to your Pydantic schemas? Your application logic needs to be robust to different versions of the structured data it receives.

- Error Handling: What happens after the max retries are exceeded? The system should fail gracefully and alert a human, rather than passing a null or invalid object downstream.
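Several of these points (a hard retry cap, a logged history of attempts, and a loud failure instead of a null result) can be sketched together in a few lines. The code below uses only the standard library; `run_with_audit_trail`, `StructuredOutputError`, and the toy validator are hypothetical names for this example. With Pydantic, `validate` would be something like `UserProfile.model_validate` and the `except` clause would catch `ValidationError`.

```python
import logging
from typing import Any, Callable, List, Tuple

logger = logging.getLogger("structured_output")


class StructuredOutputError(RuntimeError):
    """Raised when every attempt failed; carries the full attempt history."""

    def __init__(self, history: List[Tuple[Any, str]]) -> None:
        super().__init__(f"No valid output after {len(history)} attempts")
        self.history = history


def run_with_audit_trail(
    call_llm: Callable[[str], Any],
    validate: Callable[[Any], Any],
    prompt: str,
    max_retries: int = 3,
) -> Any:
    history: List[Tuple[Any, str]] = []  # (raw output, error message) per failure
    for attempt in range(1, max_retries + 1):  # hard cap bounds LLM cost
        raw = call_llm(prompt)
        try:
            result = validate(raw)
        except ValueError as exc:
            logger.warning("attempt %d/%d failed: %s", attempt, max_retries, exc)
            history.append((raw, str(exc)))
            prompt = f"{prompt}\n\nPrevious attempt failed. Error: {exc}"
            continue
        logger.info("attempt %d/%d succeeded", attempt, max_retries)
        return result
    # Fail loudly with the evidence attached instead of returning None.
    raise StructuredOutputError(history)


def require_int_id(raw: dict) -> dict:
    """Toy validator standing in for a real schema check."""
    if not isinstance(raw.get("user_id"), int):
        raise ValueError("user_id must be an integer")
    return raw


calls: List[str] = []


def always_wrong(prompt: str) -> dict:
    """Simulated model that never produces valid output."""
    calls.append(prompt)
    return {"user_id": "abc"}


try:
    run_with_audit_trail(always_wrong, require_int_id, "Extract the profile.")
    failure = None
except StructuredOutputError as exc:
    failure = exc

print(failure)
print(f"Attempts recorded: {len(failure.history) if failure else 0}")
```

The caller that catches `StructuredOutputError` is the natural place to alert a human, since `history` holds every raw output and validation error needed to debug the root cause.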

The ActiveWizards Advantage: Engineering for Reliability

The ability to reliably get structured data from an unstructured model is a cornerstone of production AI. It separates toy projects from enterprise-grade systems. This requires more than prompt engineering; it demands a robust, fault-tolerant software architecture.

At ActiveWizards, we specialize in building these reliable systems. We apply rigorous engineering principles to our AI development, ensuring that our agents are not just intelligent, but also predictable, robust, and ready for the demands of your business processes.

From Unstructured Text to Guaranteed Structure

Tired of unpredictable LLM outputs breaking your applications? Our experts can architect and build a robust structured output agent that delivers reliable, validated data every time.
