The Definitive CI/CD Pipeline for AI Agents: A Tutorial with GitHub Actions, Docker, and Kubernetes

At ActiveWizards, we engineer AI systems for production, and that means applying the same rigorous software engineering discipline to agents as any other critical service. This article provides a definitive, practical tutorial for building a CI/CD pipeline for an AI agent using a modern tech stack: GitHub Actions for orchestration, Docker for containerization, and Kubernetes for scalable deployment. This is the blueprint for automating your agent deployments and achieving production-grade reliability.

The "Why": The Unique Challenges of CI/CD for Agents

CI/CD for AI agents raises challenges that go beyond those of a standard web application:

- Configuration as Code: Prompts, model choices (e.g., `gpt-4-turbo`), and temperature settings are part of your agent's "source code." They must be version-controlled and deployed alongside the application code.

- Secret Management: Agents rely on sensitive API keys (OpenAI, Anthropic, etc.). These must be managed securely, not hardcoded.

- Dependency Complexity: The AI ecosystem moves fast. Pinning versions of libraries like `langchain`, `crewai`, and `openai` is critical to prevent unexpected behavior changes from breaking your agent.

- Resource-Intensive Workloads: Unlike a simple CRUD API, agents can be memory- and CPU-intensive. The deployment infrastructure must be sized for these loads, for example through explicit CPU and memory requests and limits on the container.
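
To make the "Configuration as Code" point concrete, here is a minimal sketch of loading agent settings from a file that lives in the repository next to the application code. The file name `agent_config.json`, the `AgentConfig` fields, and the `load_config` helper are illustrative assumptions, not part of any library.

```python
import json
from dataclasses import dataclass
from pathlib import Path


@dataclass(frozen=True)
class AgentConfig:
    """Agent settings tracked in Git alongside the code (hypothetical schema)."""
    model: str
    temperature: float
    system_prompt: str


def load_config(path: str) -> AgentConfig:
    """Read and validate the version-controlled agent settings."""
    raw = json.loads(Path(path).read_text(encoding="utf-8"))
    config = AgentConfig(
        model=str(raw["model"]),
        temperature=float(raw["temperature"]),
        system_prompt=str(raw["system_prompt"]),
    )
    # Assumed sanity check; adjust the bounds to whatever your provider accepts.
    if config.temperature < 0:
        raise ValueError("temperature must not be negative")
    return config
```

Because the file is committed, a prompt or temperature change goes through the same review, build, and deployment steps as a code change, and every image can be traced back to the exact settings it shipped with.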

The Architectural Blueprint: From Commit to Deployment

Our pipeline automates every step from a `git push` on the main branch to a live, running agent in a Kubernetes cluster. Each stage is a distinct, automated step that ensures consistency and quality.

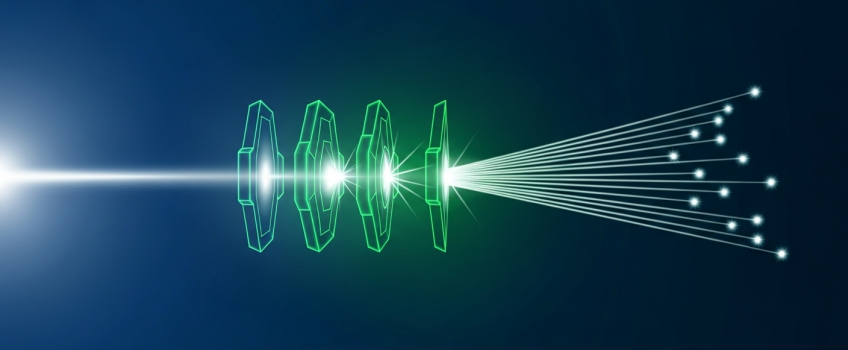

Diagram 1: The end-to-end CI/CD workflow for an AI agent.

A Practical Tutorial: Deploying a FastAPI Agent

We'll use a standard FastAPI agent as our example. The core components we need to deploy are a `Dockerfile` to package the agent and a Kubernetes manifest to describe how to run it.

Step 1: The `Dockerfile` for the Agent

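This file defines the environment for our agent. We install dependencies from `requirements.txt` first, so that Docker can cache that layer and skip the install when only application code changes, then copy the application code and define the command that runs the ASGI server.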

    # Use an official Python runtime as a parent image
    FROM python:3.11-slim

    # Set the working directory
    WORKDIR /app

    # Copy the dependency file and install dependencies
    COPY requirements.txt .
    RUN pip install --no-cache-dir -r requirements.txt

    # Copy the application code
    COPY ./app /app

    # Expose the port the app runs on
    EXPOSE 8000

    # Run the application
    CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "8000"]
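
Because the image installs whatever `requirements.txt` resolves to at build time, an unpinned entry can change the agent's behavior between two builds of the same commit. Below is a minimal sketch of a check that a CI step could run before the build. The function name `find_unpinned` and the rule that every requirement must use `==` are assumptions for illustration, and the parsing is deliberately simple (it does not handle URL requirements, for instance).

```python
def find_unpinned(requirements_text: str) -> list[str]:
    """Return the requirement lines that are not pinned with '=='."""
    unpinned = []
    for line in requirements_text.splitlines():
        # Drop inline comments, then environment markers after ';'.
        requirement = line.split("#", 1)[0].split(";", 1)[0].strip()
        if not requirement or requirement.startswith("-"):
            # Skip blank lines, comment-only lines, and pip options such as "-r base.txt".
            continue
        if "==" not in requirement:
            unpinned.append(requirement)
    return unpinned
```

A CI step could read `requirements.txt`, call `find_unpinned` on its contents, and exit with a non-zero status when the returned list is not empty, which stops the pipeline before an image is built.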

Step 2: The GitHub Actions Workflow (`.github/workflows/ci-cd.yml`)

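This is the heart of our automation. The YAML file defines the triggers and jobs. We use repository secrets to securely store credentials like `DOCKERHUB_TOKEN` and `KUBE_CONFIG_DATA`; the latter holds the base64-encoded contents of a kubeconfig file, which the workflow decodes at run time.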

    name: AI Agent CI/CD Pipeline

    # Run the pipeline on every push to main
    on:
      push:
        branches: [ "main" ]

    jobs:
      build-and-deploy:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout code
            uses: actions/checkout@v4

          - name: Set up Docker Buildx
            uses: docker/setup-buildx-action@v3

          - name: Log in to Docker Hub
            uses: docker/login-action@v3
            with:
              username: ${{ secrets.DOCKERHUB_USERNAME }}
              password: ${{ secrets.DOCKERHUB_TOKEN }}

          - name: Build and push Docker image
            uses: docker/build-push-action@v5
            with:
              context: .
              push: true
              tags: yourusername/my-agent-service:latest

          # KUBE_CONFIG_DATA is a base64-encoded kubeconfig stored as a repository secret
          - name: Set up Kubeconfig
            run: |
              mkdir -p $HOME/.kube
              echo "${{ secrets.KUBE_CONFIG_DATA }}" | base64 --decode > $HOME/.kube/config

          - name: Deploy to Kubernetes
            run: kubectl apply -f k8s/deployment.yml
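
One caveat with this workflow: it always pushes and deploys the `:latest` tag, so the manifest text never changes between commits and `kubectl apply` may report the Deployment as unchanged without rolling out the new image. A sketch of one way around this, assuming the build step also tags the image with the commit SHA, is a small script that rewrites the `image:` line before `kubectl apply` runs. `GITHUB_SHA` is a default environment variable in GitHub Actions; the helper names `pin_image` and `commit_tag` are hypothetical.

```python
import os
import re


def pin_image(manifest_text: str, repository: str, tag: str) -> str:
    """Set the tag on every 'image:' line that references `repository`."""
    pattern = re.compile(
        r"^(?P<prefix>[ \t]*(?:-[ \t]+)?image:[ \t]*)"
        + re.escape(repository)
        + r"(?::[^\s#]+)?(?=\s|$)",  # optional existing tag, then whitespace or end of line
        flags=re.MULTILINE,
    )
    # A function replacement avoids backslash interpretation in the tag.
    return pattern.sub(lambda m: f"{m.group('prefix')}{repository}:{tag}", manifest_text)


def commit_tag(default: str = "dev") -> str:
    """Return the commit SHA exposed by GitHub Actions, or a fallback for local runs."""
    return os.environ.get("GITHUB_SHA", default)
```

In the pipeline, a step between the build and the deploy could read `k8s/deployment.yml`, pass its text through `pin_image(text, "yourusername/my-agent-service", commit_tag())`, and write the result back. Each commit then produces a different manifest, so Kubernetes performs a rolling update, and the running image can be traced to a specific commit.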

Step 3: The Kubernetes Deployment Manifest (`k8s/deployment.yml`)

This file tells Kubernetes how to run our agent. It defines the `Deployment` (which manages our pods) and the `Service` (which exposes it to the network). This is where we securely inject our `OPENAI_API_KEY`.

Expert Insight: Kubernetes Secrets are Essential

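Never hardcode API keys. Create a Kubernetes secret using `kubectl create secret generic agent-secrets --from-literal=OPENAI_API_KEY='your-key-here'` and then reference it in your deployment manifest. This is the standard way to keep credentials out of images and manifests in a production environment. Keep in mind that Secrets are only base64-encoded by default, so restrict access to them and enable encryption at rest on the cluster.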

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my-agent-deployment
    spec:
      replicas: 2 # Run two instances for availability
      selector:
        matchLabels:
          app: my-agent
      template:
        metadata:
          labels:
            app: my-agent
        spec:
          containers:
            - name: my-agent-container
              image: yourusername/my-agent-service:latest # The image we just pushed
              ports:
                - containerPort: 8000
              env:
                - name: OPENAI_API_KEY
                  valueFrom:
                    secretKeyRef:
                      name: agent-secrets # Reference the K8s secret
                      key: OPENAI_API_KEY
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: my-agent-service
    spec:
      selector:
        app: my-agent
      ports:
        - protocol: TCP
          port: 80
          targetPort: 8000
      type: LoadBalancer # Expose the service externally
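
Inside the container, the secret referenced by `secretKeyRef` arrives as an ordinary environment variable. Here is a minimal sketch of reading it at startup and failing fast when it is missing, so that a misconfigured pod crashes immediately instead of failing on its first request. `require_env` is a hypothetical helper, not part of FastAPI or Kubernetes.

```python
import os


def require_env(name: str) -> str:
    """Return the value of an environment variable or raise if it is missing or blank."""
    value = os.environ.get(name, "").strip()
    if not value:
        # Report only the variable name; never log the secret value itself.
        raise RuntimeError(f"Required environment variable {name} is not set")
    return value
```

Calling `require_env("OPENAI_API_KEY")` at import time in the application module would surface a missing or misnamed secret as a crash-looping pod during rollout, which is much easier to spot than intermittent request errors.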

Production-Grade Pipeline Checklist

This tutorial provides a solid foundation. For a truly production-grade system, you must go further.

- Agent Evaluation in CI: The most critical missing step is testing. Your CI pipeline must run an evaluation suite against the agent: send a set of test prompts and assert that the responses meet quality, format, and correctness standards, using a framework such as LangSmith or TruLens.

- Staging Environments: Never deploy directly from `main` to production. The pipeline should first deploy to a `staging` environment for final integration testing before a manual approval promotes it to `production`.

- Infrastructure as Code (IaC): Manage your Kubernetes secrets and other infrastructure using a tool like Terraform or Pulumi. This makes your entire setup, including security configurations, version-controlled and reproducible.

- Image Tagging and Versioning: Using the `:latest` tag is convenient for tutorials but dangerous in production. Your pipeline should tag images with the Git commit hash (e.g., `yourusername/my-agent-service:${{ github.sha }}`) for absolute traceability.
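
As a rough illustration of the evaluation item, here is a framework-free sketch of an evaluation gate. It does not use LangSmith or TruLens; the `EvalCase` structure, the `run_evals` helper, and the stub agent are hypothetical stand-ins, and a real suite would call the actual agent and apply richer checks than substring matching.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class EvalCase:
    """One test prompt and a substring the response is expected to contain."""
    prompt: str
    must_contain: str


def run_evals(agent: Callable[[str], str], cases: list[EvalCase]) -> list[str]:
    """Return a description of each failed case; an empty list means the gate passes."""
    failures = []
    for case in cases:
        response = agent(case.prompt)
        if case.must_contain.lower() not in response.lower():
            failures.append(f"{case.prompt!r}: expected {case.must_contain!r} in response")
    return failures


def stub_agent(prompt: str) -> str:
    """Stand-in for the real agent call so the sketch runs without an API key."""
    if "France" in prompt:
        return "Paris is the capital of France."
    return "I do not know."
```

A CI step placed before the image build could run `run_evals` against the real agent and exit with a non-zero status when any failure is returned, so that a regression in prompts or model settings blocks the deployment.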

Conclusion: From Ad-Hoc to Automated

Treating your AI agent as a first-class software application with a dedicated CI/CD pipeline is the defining characteristic of a professional LLMOps practice. This automated, version-controlled, and tested approach is what transforms a clever agent into a reliable and scalable enterprise asset.

This end-to-end engineering discipline is at the heart of what we do at ActiveWizards. We build not just intelligent agents, but the robust, automated systems that allow them to thrive in demanding production environments.

Automate and Scale Your AI Deployments

Ready to move your AI agents to a professional, automated CI/CD pipeline? Our experts specialize in building robust LLMOps infrastructure that ensures your agents are reliable, scalable, and deployed with confidence.
