
Migrating to Kafka from Legacy Messaging Systems (MQ, Tibco): A Strategic Roadmap for Success

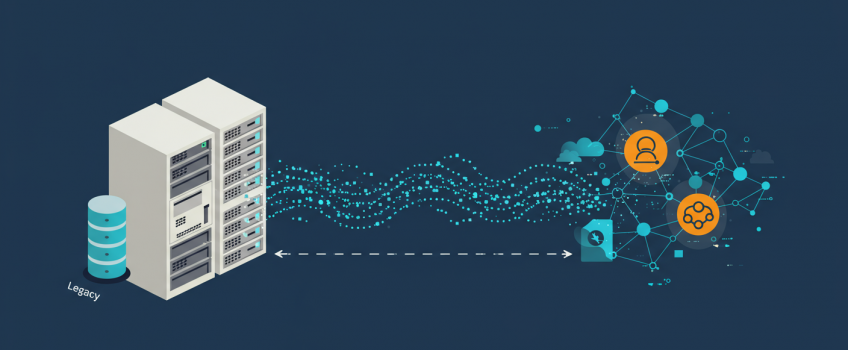

Many enterprises rely on traditional messaging systems like IBM MQ, RabbitMQ, or TIBCO EMS for critical inter-application communication. While these systems have served well, the rise of real-time data streaming, microservices architectures, and the need for scalable, fault-tolerant data pipelines often necessitate a move to modern platforms like Apache Kafka. Migrating from these legacy systems to Kafka is not just a technical lift-and-shift; it's a strategic undertaking that requires careful planning, architectural considerations, and a phased approach to ensure minimal disruption and maximum benefit.

This article provides a strategic roadmap for successfully migrating from legacy messaging systems to Apache Kafka. We'll cover key drivers for migration, compare Kafka with traditional MQs, outline a phased migration strategy, discuss common challenges, and highlight best practices. At ActiveWizards, we guide organizations through these complex modernization journeys, leveraging our deep expertise in both legacy systems and advanced Kafka architectures to engineer intelligent, future-proof data solutions.

Why Migrate from Legacy MQ to Kafka? Key Drivers

Organizations choose to migrate to Kafka for several compelling reasons:

- Scalability & Throughput: Kafka scales horizontally by partitioning topics across brokers, and well-sized clusters can sustain far higher message volumes (on the order of millions of messages per second) than many traditional MQs.

- Data Streaming Capabilities: Kafka is more than just a message queue; it's a distributed streaming platform enabling real-time stream processing, event sourcing, and complex event processing.

- Durability & Fault Tolerance: Kafka's distributed log architecture with replication provides strong durability and high availability.

- Decoupling & Flexibility: Kafka acts as a central nervous system, decoupling producers and consumers effectively, and supporting diverse data formats and integration patterns.

- Ecosystem & Community: Kafka has a vast and vibrant ecosystem (Kafka Connect, Kafka Streams, ksqlDB, schema registry) and strong community support.

- Cost Optimization: In some cases, migrating to open-source Kafka can lead to lower licensing and operational costs compared to commercial MQ solutions.

- Modernization for Microservices & Cloud-Native: Kafka is well-suited for microservices architectures and cloud-native deployments.

Kafka vs. Traditional Message Queues: A Paradigm Shift

Understanding the fundamental differences is key to a successful migration:

| Feature | Traditional MQ (e.g., IBM MQ, RabbitMQ) | Apache Kafka |
|---|---|---|
| Primary Model | Point-to-point (queues), Publish/Subscribe (topics, often with message deletion after consumption by all subscribers) | Distributed, append-only commit log (topics with durable storage and consumer-managed offsets) |
| Message Retention | Typically short-lived; messages deleted after consumption. | Long-term retention configurable per topic; messages persist even after consumption. Enables replays. |
| Consumer Model | Broker often manages message state for consumers (e.g., destructive reads from queues). | Consumers manage their own offsets (pointers to their position in the log). Non-destructive reads. Multiple consumer groups can read the same data independently. |
| Scalability | Varies; often scales vertically or with complex clustering. | Designed for massive horizontal scalability by adding brokers and partitioning topics. |
| Ordering | Guaranteed within a queue. | Guaranteed ordering per partition. Global ordering requires single partition or careful application design. |
| Use Cases | Traditional enterprise messaging, task queues, request/reply. | Real-time data streaming, event sourcing, log aggregation, stream processing, microservice integration. |

Migrating to Kafka is not just about replacing queues with topics; it often involves re-thinking message consumption patterns and leveraging Kafka's stream-centric capabilities.
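
To make the consumer-model difference concrete, here is a minimal in-memory sketch in Python. It is a toy model, not Kafka client code: `DestructiveQueue` and `AppendOnlyLog` are hypothetical classes invented for illustration, and the log folds "current position" and "committed offset" into a single number, which a real consumer keeps separate.

```python
from collections import deque


class DestructiveQueue:
    """Toy stand-in for a traditional queue: a read removes the message."""

    def __init__(self):
        self._messages = deque()

    def put(self, message):
        self._messages.append(message)

    def get(self):
        # Once returned, the message is gone for every other reader.
        return self._messages.popleft() if self._messages else None


class AppendOnlyLog:
    """Toy stand-in for a single Kafka partition: reads never remove data."""

    def __init__(self):
        self._records = []
        self._offsets = {}  # consumer group -> next offset to read

    def append(self, record):
        self._records.append(record)
        return len(self._records) - 1  # offset assigned to the new record

    def poll(self, group, max_records=10):
        start = self._offsets.get(group, 0)
        batch = self._records[start:start + max_records]
        self._offsets[group] = start + len(batch)
        return batch

    def seek(self, group, offset):
        # Rewinding a group's offset is what makes replay possible.
        self._offsets[group] = offset


queue = DestructiveQueue()
log = AppendOnlyLog()
for event in ["order-1", "order-2", "order-3"]:
    queue.put(event)
    log.append(event)

queue_first_reader = [queue.get(), queue.get(), queue.get()]
queue_second_reader = queue.get()  # nothing left for a second reader

billing = log.poll("billing")
analytics = log.poll("analytics")  # same records, independent offset
log.seek("billing", 0)
billing_replay = log.poll("billing")  # replay from the beginning
```

The second reader of the queue gets nothing, while both consumer groups on the log see all three records and the billing group can rewind and read them again. That replay ability is usually the first behavior teams need to design for when porting consumers.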

A Phased Strategic Roadmap for Migration

A successful migration is typically executed in phases to manage risk and complexity.

Diagram 1: Phased Roadmap for Migrating to Kafka from Legacy MQ.

Phase 1: Discovery & Planning

- Identify Use Cases & Drivers: Clearly articulate why you are migrating and what business outcomes you expect.

- Assess Legacy System: Inventory existing queues/topics, message formats, data volumes, producing/consuming applications, SLAs, and integration points. Understand current pain points.

- Define Kafka Architecture: Design your target Kafka architecture, including cluster sizing, topic design (partitioning, replication), security, HA/DR strategy, and monitoring.

- Develop Migration Plan: Create a detailed plan with timelines, resource allocation, risk assessment, and rollback strategies.

- Skill Up Your Team: Invest in Kafka training for developers and operations staff.
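
For the topic design step, a rough first pass at partition counts can be sketched as below. This follows a commonly cited rule of thumb (enough partitions for both producers and consumers to reach the target throughput) and is only a starting point; the throughput figures are made-up inputs you would replace with your own measurements. The `partition_for` helper uses CRC32 purely as a stand-in for the client's real key hash, to show why records with the same key keep their relative order.

```python
import math
import zlib


def estimate_partitions(target_mb_s, producer_mb_s_per_partition,
                        consumer_mb_s_per_partition):
    """Rule-of-thumb partition count so both sides can reach the target."""
    needed_for_producers = target_mb_s / producer_mb_s_per_partition
    needed_for_consumers = target_mb_s / consumer_mb_s_per_partition
    return max(1, math.ceil(max(needed_for_producers, needed_for_consumers)))


def partition_for(key, num_partitions):
    """Map a key to a partition with a stable hash.

    Kafka's Java client hashes keyed records with murmur2; CRC32 is used
    here only so the sketch runs on the standard library.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


# Hypothetical measurements: 100 MB/s target, 20 MB/s per partition on the
# producer side, 10 MB/s per partition on the consumer side.
partitions = estimate_partitions(
    target_mb_s=100,
    producer_mb_s_per_partition=20,
    consumer_mb_s_per_partition=10,
)

# The same key always lands on the same partition, which is what gives
# per-key ordering even though the topic as a whole is not globally ordered.
first = partition_for("customer-42", partitions)
second = partition_for("customer-42", partitions)
```

With these inputs the consumer side is the bottleneck, so the estimate is 10 partitions. Changing the partition count later changes the key-to-partition mapping, so it is worth leaving headroom during the planning phase.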

Phase 2: Proof of Concept (PoC) & Pilot

- Select a Non-Critical Use Case: Choose a representative but lower-risk application or data flow for the initial pilot.

- Set Up Kafka Dev/Test Environment: Build a small-scale Kafka cluster for development and testing.

- Develop Connectors/Adapters: If existing applications can't be modified to use Kafka clients directly, build or configure adapters (e.g., using Kafka Connect JMS Source/Sink connectors, Spring Cloud Stream binders).

- Test & Validate: Thoroughly test functionality, performance, data integrity, and failure scenarios for the pilot application. Gather learnings.
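
One way to check data integrity during the pilot is to reconcile what the legacy system delivered against what arrived in Kafka. The sketch below assumes you can capture raw payloads from both sides for the same time window; the function names are illustrative, and comparing by content hash is just one possible approach (a business message ID, if one exists, is often a better key).

```python
import hashlib
from collections import Counter


def fingerprint(payload: bytes) -> str:
    """Content hash used to compare messages across the two systems."""
    return hashlib.sha256(payload).hexdigest()


def reconcile(legacy_payloads, kafka_payloads):
    """Count matched, missing, and extra messages between the two captures."""
    legacy = Counter(fingerprint(p) for p in legacy_payloads)
    kafka = Counter(fingerprint(p) for p in kafka_payloads)
    missing = legacy - kafka  # on legacy, but not (or fewer times) on Kafka
    extra = kafka - legacy    # duplicates or unexpected records on Kafka
    matched = legacy & kafka
    return {
        "matched": sum(matched.values()),
        "missing": sum(missing.values()),
        "extra": sum(extra.values()),
    }


report = reconcile(
    legacy_payloads=[b"a", b"b", b"c"],
    kafka_payloads=[b"a", b"b", b"b"],
)
```

In this example the report shows two matched messages, one missing (`c`), and one extra (the duplicated `b`). Duplicates are worth tracking separately because at-least-once bridges can legitimately produce them.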

Phase 3: Coexistence & Incremental Migration (The "Strangler Fig" Pattern)

This is often the longest and most critical phase. The goal is to gradually shift traffic and applications to Kafka while the legacy system remains operational.

- Deploy Kafka Production Cluster: Set up your production-grade Kafka cluster based on the architecture defined in Phase 1.

- Bridge Legacy MQ to Kafka: Implement a bridge to flow messages from the legacy MQ to Kafka, or vice-versa, or both.

    - Kafka Connect: Use Kafka Connect with appropriate source connectors (e.g., JMS Source Connector, IBM MQ Source Connector) to pull data from legacy queues/topics into Kafka topics. Sink connectors can send data from Kafka back to legacy MQs if needed for a period.

    - Custom Adapters: Develop custom applications that consume from legacy MQ and produce to Kafka, or vice-versa.

- Migrate Applications Incrementally:

    - Producers First: Modify or adapt producer applications to write to Kafka topics (either directly or via the bridge sending to Kafka). Consumers can initially continue reading from the legacy MQ (with data bridged from Kafka if producers write to Kafka first).

    - Then Consumers: Modify or adapt consumer applications to read from Kafka topics.

- Monitor Both Systems: Closely monitor message flow, latency, and error rates in both the legacy MQ and Kafka during this phase.

Gradually "strangle" the legacy system by incrementally moving functionality to the new Kafka-based system. Bridging is key here. As more applications use Kafka directly, the reliance on the legacy MQ and the bridge diminishes, eventually allowing for its decommissioning. This minimizes risk compared to a "big bang" migration.
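
The core loop of a custom bridge adapter can be sketched as follows. The two classes are in-memory fakes invented for this example, standing in for a legacy MQ session with explicit acknowledgement and a Kafka producer; a real adapter would use your MQ client and a Kafka client library instead. The point is the ordering of operations: acknowledge on the legacy side only after the write to Kafka has succeeded, which gives at-least-once delivery across the bridge.

```python
class InMemoryLegacyQueue:
    """Hypothetical stand-in for an MQ session with explicit acknowledgement."""

    def __init__(self, messages):
        self._pending = list(messages)
        self.acked = []

    def receive(self):
        # Peek at the oldest unacknowledged message without removing it.
        return self._pending[0] if self._pending else None

    def ack(self, message):
        self._pending.remove(message)
        self.acked.append(message)


class InMemoryKafkaProducer:
    """Hypothetical stand-in for a producer; fails once for chosen values."""

    def __init__(self, fail_once_on=()):
        self.sent = []
        self._fail_once_on = set(fail_once_on)

    def send(self, topic, value):
        if value in self._fail_once_on:
            self._fail_once_on.discard(value)
            raise ConnectionError("broker unavailable")
        self.sent.append((topic, value))


def bridge(queue, producer, topic, max_attempts=3):
    """Forward messages to Kafka, acknowledging only after a successful send."""
    forwarded = 0
    while (message := queue.receive()) is not None:
        for attempt in range(1, max_attempts + 1):
            try:
                producer.send(topic, message)
                break
            except ConnectionError:
                if attempt == max_attempts:
                    # Give up; the message stays unacknowledged on the legacy side.
                    return forwarded
        queue.ack(message)
        forwarded += 1
    return forwarded


legacy = InMemoryLegacyQueue(["m1", "m2", "m3"])
producer = InMemoryKafkaProducer(fail_once_on={"m2"})
forwarded = bridge(legacy, producer, topic="orders.bridged")
```

Here `m2` fails on its first send and succeeds on retry, so all three messages are forwarded in order. Because a crash between the send and the acknowledgement would cause a resend, downstream Kafka consumers should be prepared for occasional duplicates.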

Phase 4: Full Cutover & Decommissioning

- Migrate Remaining Applications: Complete the migration of all targeted applications and data flows to Kafka.

- Verify Data Integrity & Performance: Conduct thorough end-to-end testing to ensure all systems are functioning correctly with Kafka as the messaging backbone.

- Redirect All Traffic: Ensure all relevant message traffic is flowing through Kafka and no new messages are being written to the legacy MQ.

- Decommission Legacy MQ System: Once confident, carefully decommission the legacy messaging system. This includes shutting down servers, archiving data (if needed), and removing old configurations.
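
Before switching anything off, it can help to turn "once confident" into an explicit checklist. The sketch below is one hypothetical way to do that; `CutoverSnapshot` and its fields are invented for illustration, and how you collect queue depths and write counts depends entirely on your MQ tooling.

```python
from dataclasses import dataclass, field


@dataclass
class CutoverSnapshot:
    """Hypothetical point-in-time view of the legacy system and the bridge."""
    queue_depths: dict = field(default_factory=dict)   # queue -> messages waiting
    recent_writes: dict = field(default_factory=dict)  # queue -> writes in window
    bridge_backlog: int = 0                            # messages not yet forwarded


def decommission_blockers(snapshot):
    """Return human-readable reasons the legacy system cannot be retired yet."""
    blockers = []
    for queue, depth in sorted(snapshot.queue_depths.items()):
        if depth > 0:
            blockers.append(f"{queue}: {depth} messages not yet consumed")
    for queue, writes in sorted(snapshot.recent_writes.items()):
        if writes > 0:
            blockers.append(f"{queue}: {writes} new writes in the observation window")
    if snapshot.bridge_backlog > 0:
        blockers.append(f"bridge: {snapshot.bridge_backlog} messages not yet forwarded")
    return blockers


snapshot = CutoverSnapshot(
    queue_depths={"ORDERS.IN": 0, "BILLING.IN": 12},
    recent_writes={"ORDERS.IN": 0, "BILLING.IN": 3},
    bridge_backlog=0,
)
blockers = decommission_blockers(snapshot)
```

An empty list means none of these particular checks object to decommissioning; it does not replace the end-to-end verification described above.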

Phase 5: Optimization & Evolution

- Fine-tune Kafka Performance: Now that Kafka is handling full production load, optimize producer, broker, and consumer configurations based on observed behavior.

- Implement Advanced Monitoring & Alerting: Ensure comprehensive monitoring is in place for the Kafka cluster and related applications.

- Explore Kafka Ecosystem: Leverage Kafka Streams, ksqlDB, Schema Registry, and other ecosystem components to build advanced streaming applications and enhance data governance.

- Regularly Review & Evolve: Periodically review your Kafka architecture and usage patterns to ensure it continues to meet business needs and incorporate new Kafka features.
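
Consumer lag is typically one of the first metrics worth alerting on. As a minimal sketch, lag per partition is the log end offset minus the consumer group's committed offset. The function names and the threshold are illustrative, and treating a missing committed offset as 0 is an assumption made here for simplicity; in practice that case depends on the consumer's offset reset policy.

```python
def partition_lag(end_offsets, committed_offsets):
    """Lag per partition = log end offset - committed offset."""
    return {
        partition: end - committed_offsets.get(partition, 0)
        for partition, end in end_offsets.items()
    }


def lagging_partitions(end_offsets, committed_offsets, threshold):
    """Partitions whose lag exceeds the alert threshold, in sorted order."""
    lag = partition_lag(end_offsets, committed_offsets)
    return sorted(p for p, value in lag.items() if value > threshold)


# Hypothetical offsets for a three-partition topic.
end_offsets = {0: 1500, 1: 1200, 2: 900}
committed = {0: 1490, 1: 200}  # partition 2 has no committed offset yet

lag = partition_lag(end_offsets, committed)
alerts = lagging_partitions(end_offsets, committed, threshold=500)
```

Partition 0 is nearly caught up, while partitions 1 and 2 exceed the threshold. A lag that grows steadily usually points at under-provisioned consumers or a slow downstream dependency rather than at the brokers.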

Common Challenges & Mitigation Strategies

| Challenge | Description | Mitigation Strategy |
|---|---|---|
| Semantic Differences | MQ paradigms (e.g., destructive reads, message selectors) vs. Kafka's log model. | Educate teams. Re-architect consumer logic for Kafka's offset management. Use Kafka Streams for filtering/routing. |
| Data Transformation & Format Changes | Legacy systems might use proprietary formats or require data transformation. | Use Kafka Connect Single Message Transforms (SMTs), custom converters, or dedicated ETL/streaming jobs. Schema Registry for schema evolution. |
| Transactionality & Exactly-Once | Replicating transactional behavior from legacy MQs can be complex. | Leverage Kafka's Exactly-Once Semantics (EOS) if needed. Carefully design application logic for idempotency or two-phase commits if interacting with external transactional systems. |
| Application Rewrites vs. Adapters | Deciding whether to rewrite applications to use Kafka clients natively or use adapters/connectors. | Balance long-term benefits of native integration vs. short-term speed of adapters. Prioritize critical/high-volume apps for native integration. |
| Monitoring & Operational Shift | Different monitoring metrics and operational procedures for Kafka. | Invest in Kafka-specific monitoring tools and training for operations teams. |
| Cultural Resistance & Skill Gaps | Teams may be accustomed to legacy systems and unfamiliar with Kafka. | Provide comprehensive training, foster a collaborative environment, highlight benefits of modernization. Engage expert consultants. |
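
Two of the mitigations above, replacing broker-side message selectors with consumer-side filtering and designing for idempotency, can be sketched together. This is a simplified illustration with hypothetical names: the set of seen IDs lives in memory here, whereas a real consumer would need a durable store, and it assumes each message carries a stable unique ID.

```python
class IdempotentHandler:
    """Applies each message ID at most once, so redeliveries are harmless."""

    def __init__(self, apply):
        self._apply = apply
        self._seen = set()  # in production this would be a durable store

    def handle(self, message_id, payload):
        if message_id in self._seen:
            return False  # duplicate: skip the side effect
        self._apply(payload)
        self._seen.add(message_id)
        return True


def eu_only(record):
    """Consumer-side replacement for a broker-side selector like region = 'EU'."""
    return record["region"] == "EU"


records = [
    {"id": "tx-1", "region": "EU", "amount": 100},
    {"id": "tx-2", "region": "US", "amount": 55},
    {"id": "tx-3", "region": "EU", "amount": -30},
    {"id": "tx-1", "region": "EU", "amount": 100},  # redelivered duplicate
]

applied = []
handler = IdempotentHandler(applied.append)
outcomes = [handler.handle(r["id"], r["amount"]) for r in records if eu_only(r)]
```

The US record is filtered out by the consumer rather than the broker, and the redelivered `tx-1` is recognized and skipped, so only the amounts 100 and -30 are applied. When many consumers need the same filter, a dedicated filtered topic (for example, produced by a stream processing job) avoids every consumer reading and discarding the same data.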

Conclusion: Modernizing Your Data Backbone with Confidence

Migrating from legacy messaging systems like MQ or Tibco to Apache Kafka is a significant but often transformative step towards a more scalable, resilient, and real-time data architecture. By adopting a strategic, phased roadmap—encompassing thorough planning, pilot projects, incremental migration with coexistence, and careful decommissioning—organizations can navigate this complex journey successfully. Understanding the paradigm shifts between traditional MQs and Kafka's stream-centric model is crucial for re-architecting applications to fully leverage Kafka's capabilities.

While the path involves challenges, the benefits of a modernized messaging and streaming platform built on Kafka are substantial. ActiveWizards possesses the deep expertise required to guide enterprises through every phase of this migration, from initial assessment and architectural design to implementation, optimization, and operationalization, ensuring a smooth transition and a powerful new data backbone.

Modernize Your Messaging with ActiveWizards: Migrate to Kafka Seamlessly

Ready to move beyond the limitations of your legacy MQ system? ActiveWizards provides end-to-end services for migrating to Apache Kafka, helping you unlock scalability, real-time streaming, and a future-proof data architecture. Let our experts chart your course to success.
